Ideas on Scaling up Security Architecture for Deploying Web Apps

Let us take the case of a mid-sized company that wants to scale up the security architecture of its client-facing website. As per the terms of reference, there should be minimal disruption to the existing workflow, and any kind of code rewrite is off the table. They have a classic deployment in which the website is hosted on Virtual Private Servers (VPSs).

We can adopt a two-pronged strategy here: first minimize the attack surface, then monitor the traffic that is allowed in. The first part is done by limiting access to the hosted infrastructure (VPS), dropping all traffic at ingress except HTTPS on port 443. We also need to limit access to the web APIs so that internal APIs are not exposed to the outside world and all incoming traffic on the exposed public APIs is monitored.

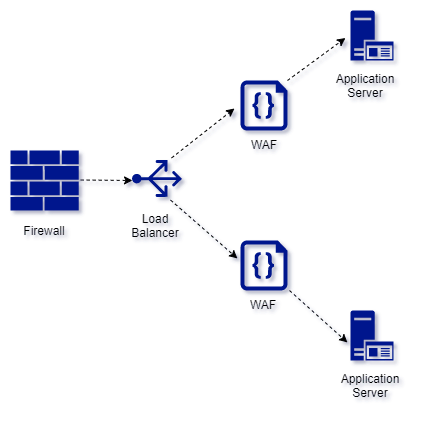

Now, having worked out the design goals, we can rework the architecture of our deployment. There are many ways to approach the security architecture; one of them is to introduce the following components.

- Infrastructure Firewall

- Proxy / Load balancer

- Web Application Firewall

Infrastructure Firewall

The infrastructure firewall drops all traffic at the ingress of our VPS except HTTP and HTTPS (HTTP stays open only so that the proxy can redirect it to HTTPS). We can also configure the firewall to accept traffic only from specific IPs or subnets. Some sample rules for firewalld on CentOS 7 are given here:

Firewalld Rules

# Step 1: Add the whitelisted IP / subnet as a source of the built-in trusted zone

firewall-cmd --permanent --add-source=10.10.10.10 --zone=trusted

# Step 2: Add the http and https services to the trusted zone

firewall-cmd --permanent --add-service=http --zone=trusted

firewall-cmd --permanent --add-service=https --zone=trusted

# Step 3: Remove the http and https services from the public zone

firewall-cmd --permanent --remove-service=http --zone=public

firewall-cmd --permanent --remove-service=https --zone=public

# Step 4: Apply the permanent rules to the running firewall

firewall-cmd --reload
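After reloading, it is worth confirming that the zones ended up as intended. The following is a minimal sketch and not part of the original rules: `has_service` is a hypothetical helper name, and the script assumes that `firewall-cmd --list-services` prints the services of a zone as a space-separated list.

```shell
#!/usr/bin/env bash

# has_service LIST NAME
# Succeeds when NAME appears as a whole word in the space-separated LIST.
# (Hypothetical helper, used only for this check.)
has_service() {
  case " $1 " in
    *" $2 "*) return 0 ;;
    *)        return 1 ;;
  esac
}

# Only query firewalld when the CLI is installed and the daemon is running.
if command -v firewall-cmd >/dev/null 2>&1 && firewall-cmd --state >/dev/null 2>&1; then
  public_services=$(firewall-cmd --zone=public --list-services)
  for svc in http https; do
    if has_service "$public_services" "$svc"; then
      echo "WARNING: $svc is still allowed in the public zone"
    else
      echo "OK: $svc is not allowed in the public zone"
    fi
  done
  # Show which source addresses are bound to the trusted zone.
  firewall-cmd --zone=trusted --list-sources
fi
```

If the rules were applied as described, both services should be reported as not allowed in the public zone, and the whitelisted address should be listed as a source of the trusted zone.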

Proxy / Load balancer

The proxy ensures that our website is not directly exposed to the outside world and brings the additional benefit of load balancing. We can set up Access Control Lists (ACLs) so that only specific endpoints of our website APIs are accessible from outside. This also safeguards against accidental exposure of our internal APIs. Some sample configuration settings for HAProxy could be as follows:

HAProxy Config Rules

frontend httpsandhttp

bind *:80

bind *:443 ssl crt /etc/ssl/haproxy.pem

# Redirect traffic to https

http-request redirect scheme https unless { ssl_fc }

mode http

# Expose only request paths that begin with /app1 or /app2

acl app1 path_beg -i /app1

acl app2 path_beg -i /app2

use_backend app1Servers if app1

use_backend app2Servers if app2

backend app1Servers

balance roundrobin

mode http

server webserver1 127.0.0.1:2222 check weight 1 maxconn 50 ssl verify none

backend app2Servers

balance roundrobin

mode http

server webserver2 127.0.0.1:3333 check weight 1 maxconn 50 ssl verify none
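A quick smoke test can confirm that only the intended paths are reachable through the proxy. This is a sketch under assumptions: the host name `example.com` and the path `/internal` are placeholders, and `status_of` and `classify` are hypothetical helper names. It relies on HAProxy answering with 503 when a request matches no `use_backend` rule and no `default_backend` is defined, as in the configuration above.

```shell
#!/usr/bin/env bash

# Placeholder host; override with HOST=your.site when running.
HOST="${HOST:-example.com}"

# status_of URL: print only the HTTP status code that curl receives.
# -k skips certificate verification, which is an assumption that suits a
# test setup with a self-signed certificate; drop it for a trusted one.
status_of() {
  curl -k -s -o /dev/null -w '%{http_code}' "$1"
}

# classify CODE: describe what a status code suggests about the proxy rules.
classify() {
  case "$1" in
    503) echo "no backend matched, or backend unavailable" ;;
    000) echo "connection failed" ;;
    *)   echo "answered with $1" ;;
  esac
}

# Network calls happen only when the script is invoked with "run".
if [ "${1:-}" = "run" ]; then
  for path in /app1 /app2 /internal; do
    code=$(status_of "https://$HOST$path")
    echo "$path -> $(classify "$code")"
  done
fi
```

Running the script with the `run` argument should show the two public paths being answered, while the placeholder internal path falls through to the 503 case.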

Web Application Firewall

The traffic from our proxy now goes through the Web Application Firewall (WAF), which is configured to block attacks on common web application vulnerabilities. We could use an open source WAF such as ModSecurity or a vendor-specific one. WAFs can also be configured to act as a proxy that forwards the actual website traffic, so the proxy and the WAF can be bundled into one piece of software. Everything can run in a containerised environment using Docker or Kubernetes.
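One way to check that the WAF is actually inspecting traffic is to send a request that merely resembles an attack and look at the response code. The sketch below assumes a rule set that rejects blocked requests with 403, which is common for ModSecurity deployments but depends entirely on the configuration. The host `example.com`, the path `/app1`, the parameter `q`, and the helper names `probe` and `verdict` are placeholders.

```shell
#!/usr/bin/env bash

HOST="${HOST:-example.com}"        # placeholder host
BLOCK_CODE="${BLOCK_CODE:-403}"    # assumed status for blocked requests

# probe URL: send a harmless request whose query string looks like a
# script injection, and print only the resulting HTTP status code.
probe() {
  curl -k -s -o /dev/null -w '%{http_code}' -G \
       --data-urlencode 'q=<script>alert(1)</script>' "$1"
}

# verdict CODE: compare the observed status with the assumed block status.
verdict() {
  if [ "$1" = "$BLOCK_CODE" ]; then
    echo "blocked"
  else
    echo "not blocked (status $1)"
  fi
}

# Network calls happen only when the script is invoked with "run".
if [ "${1:-}" = "run" ]; then
  verdict "$(probe "https://$HOST/app1")"
fi
```

A "not blocked" result does not by itself prove that the WAF is inactive, since it may be running in a detection-only mode that logs without rejecting.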

References

- https://www.youtube.com/watch?v=qYnA2DFEELw

- https://www.haproxy.com/blog/redirect-http-to-https-with-haproxy/